Software Engineering

As AI-powered systems become ubiquitous, software solutions that support their productionization become essential. The recent success of Large Language Models would not have been possible without proper software solutions for scaling the training and inference of such models. At the same time, applying Artificial Intelligence and Machine Learning to novel software solutions enables new kinds of hardware and tooling, such as FPGA-based Machine Learning accelerators and specialized compilers for Machine Learning algorithms. The ScaDS.AI Dresden/Leipzig area of software engineering seeks to leverage these opportunities to enable the development and optimization of the next generation of AI-based systems.

Research Focus

Specifically, we concentrate on three research areas: MLOps and productionization, support for FPGA-based Machine Learning accelerators, and Machine Learning-enabled compiler optimizations.

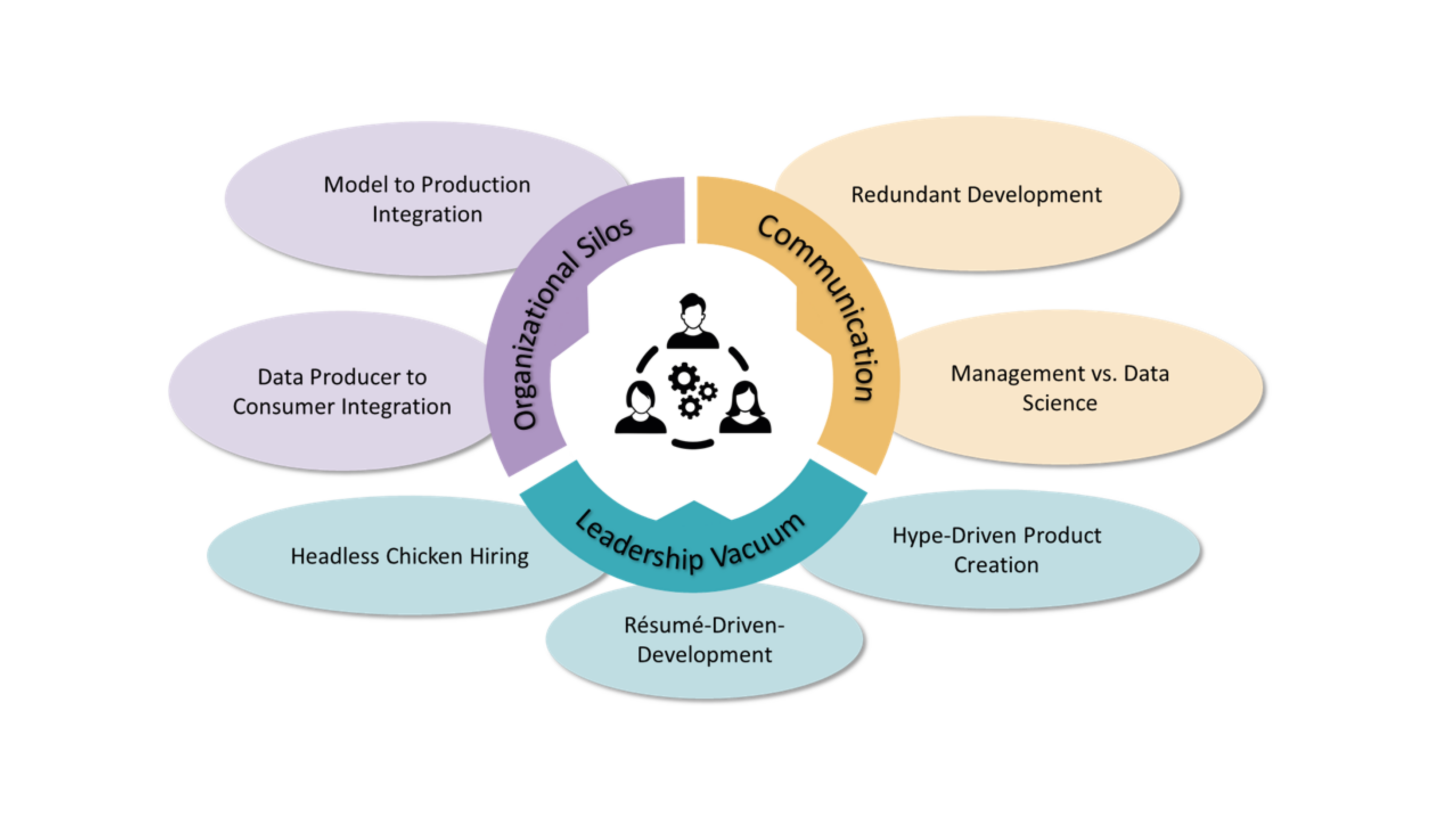

- First, we aim to uncover the main obstacles and challenges to the productionization of Machine Learning models. These include sociotechnical challenges among teams and within organizational structures, as well as technological ambiguities of AI pipelines, especially for systems based on Large Language Models.

- Moreover, we aim to improve the energy efficiency and performance of AI-based systems using modern sampling, learning, and Large Language Model-based techniques.

- Overall, the goal is to research methods that improve the productionization of modern AI systems.

Aims

The overarching goal of our work is to enable the efficient use of Artificial Intelligence methods and tools. That is, we strive to substantially improve the performance and energy consumption of training, inference, and deployment of Machine Learning models and code, and to enable novel hardware platforms. We follow a strategic approach that covers the full stack of AI-related aspects: from specialized hardware support (e.g., Machine Learning-focused FPGAs), through specialized compilers for Machine Learning code, to the integration of Machine Learning models and code into the infrastructure and software stack (i.e., MLOps).